- Consider a modification to the activity-selection problem in which each activity $a_i$ has, in addition to a start and finish time, a value $v_i$. The objective is no longer to maximize the number of activities scheduled, but instead to maximize the total value of the activities scheduled. That is, we wish to choose a set $A$ of compatible activities such that $\sum_{a_k \in A} v_k$ is maximized. Give a polynomial-time algorithm for this problem.

Solution:

An easy and straightforward solution is dynamic programming based on the following recurrence: \(c[i, j] = \begin{cases} 0 & \text{if } S_{ij} = \emptyset, \\ \max \{ c[i, k] + c[k, j] + v_k : a_k \in S_{ij} \} &\text{if } S_{ij} \ne \emptyset. \end{cases}\)

The subproblems are still indexed by a pair of activities, so there are $O(n^2)$ of them, and each calculation requires taking the maximum over a set of size $|S_{ij}| \in O(n)$. The total running time is therefore bounded by $O(n^3)$.

However, with a little cunning we can be more efficient and give an algorithm which runs in $O(n\log n)$.

INPUT: $n$ activities with values.

IDEA OF ALGORITHM:

- Sort the input vector of activities by finish time in ascending order. Denote the activities in this sorted vector by $(a_0, a_1, \dots, a_{n - 1})$.

- For each $0 \le i < n$, construct a partial solution $S_i$. By a partial solution $S_i$ we mean a solution to the problem that considers only activities with indices less than or equal to $i$. Remember the value of each partial solution.

- Clearly $S_0 = \{a_0\}$.

- Construct $S_{i + 1}$ as follows. It is either $S_i$, or the solution obtained by joining the activity $a_{i + 1}$ with the partial solution $S_j$, where $j < i + 1$ is the index such that $a_j$ is compatible with $a_{i + 1}$ but $a_{j + 1}$ is not compatible with $a_{i + 1}$. If no such $j$ exists, the second candidate is just $\{a_{i + 1}\}$. Pick whichever of the two candidates has the greater value. Ties can be resolved arbitrarily.

- Therefore we can construct the partial solutions in the order $S_0, S_1, \dots, S_{n - 1}$, using the base case for $S_0$ and the construction step above for all the others.

- Return $S_{n - 1}$ as the solution to the problem.

ANALYSIS OF TIME COMPLEXITY:

- Sorting the activities can be done in $O(n\log n)$ time.

- Finding the value of $S_0$ takes $O(1)$ time.

- Any $S_{i + 1}$ can be found in $O(\log n)$ time. Because the activities are sorted by finish time, we can find the proper $j$ for each $i + 1$ in $O(\log n)$ using binary search. Once we have the proper $j$, the rest can be done in $O(1)$.

- Therefore, constructing all of the $S_i$’s takes $O(n\log n)$ time.

IMPLEMENTATION DETAILS:

- Use dynamic programming.

- It is important not to remember too much for each $S_i$. Do not construct the $S_i$’s explicitly (you can end up with $\Omega(n^2)$ time if you do so). For each $S_i$ it is sufficient to remember:

  - its value

  - whether or not it includes the activity $a_i$

  - the value of $j$ (from the step that constructs $S_{i + 1}$).

- Using the information recorded during the run of the algorithm, you can reconstruct the solution in $O(n)$ time, which does not affect the final time complexity.
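The steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not a definitive implementation: activities are hypothetical `(start, finish, value)` tuples treated as half-open intervals, so two activities are compatible when one finishes no later than the other starts, and the names `max_value_schedule`, `takes`, and `prev` are illustrative only.

```python
from bisect import bisect_right


def max_value_schedule(activities):
    """Return (best_value, chosen) for a list of (start, finish, value)."""
    acts = sorted(activities, key=lambda a: a[1])  # sort by finish time
    n = len(acts)
    if n == 0:
        return 0, []
    finishes = [f for _, f, _ in acts]
    value = [0] * n      # value[i]: value of the partial solution S_i
    takes = [False] * n  # takes[i]: whether S_i includes a_i
    prev = [-1] * n      # prev[i]: the index j for a_i, or -1 if none
    for i, (s, _, v) in enumerate(acts):
        # Binary search: last activity before a_i that finishes by time s.
        j = bisect_right(finishes, s, 0, i) - 1
        prev[i] = j
        with_i = v + (value[j] if j >= 0 else 0)
        without_i = value[i - 1] if i > 0 else 0
        takes[i] = with_i > without_i
        value[i] = max(with_i, without_i)
    # Reconstruct the chosen set in O(n) from the three stored fields.
    chosen = []
    i = n - 1
    while i >= 0:
        if takes[i]:
            chosen.append(acts[i])
            i = prev[i]
        else:
            i -= 1
    chosen.reverse()
    return value[n - 1], chosen
```

Only three small fields are stored per index, which matches the advice above about not materializing each $S_i$.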

PROOF OF CORRECTNESS: (sketched)

- Clearly, $S_0 = \{a_0\}$.

- For $S_{i + 1}$ we argue by the construction step. The partial solution $S_{i + 1}$ either includes the activity $a_{i + 1}$ or does not include it; there is no third way.

- If it does not include $a_{i + 1}$, then clearly $S_{i + 1} = S_i$.

- If it includes $a_{i + 1}$, then $S_{i + 1}$ consists of $a_{i + 1}$ and a partial solution which uses only activities compatible with $a_{i + 1}$ with indices lower than $i + 1$. Since the activities are sorted by finish time, activities with indices $j$ and lower are compatible with $a_{i + 1}$, and activities with indices from $j + 1$ up to $i$ are not. We do not consider any other activities for $S_{i + 1}$. Therefore setting $S_{i + 1} = \{a_{i + 1}\} \cup S_j$ gives the correct answer in this case. The fact that we need $S_j$ and not some other solution for the activities with indices up to $j$ can be shown by the standard cut-and-paste argument.

- Since for $S_{n - 1}$ we consider all of the activities, it is actually the solution of the problem.

- Show how to solve the fractional knapsack problem in $O(n)$ time.

Solution:

First compute the value of each item, defined to be its worth divided by its weight. We then proceed recursively. Find the item of median value, which can be done in linear time as shown in Chapter 9. Sum the weights of all items whose value exceeds the median and call the sum $M$. If $M$ exceeds $W$, then the solution to the fractional knapsack problem takes items only from this collection. In other words, we are now solving the fractional knapsack problem on an input of size $n / 2$. On the other hand, if $M$ does not exceed $W$, we take all of these items and then solve the fractional knapsack problem on the remaining $n / 2$ low-value items with maximum weight $W - M$. Let $T(n)$ denote the running time of the algorithm. Since we can solve the problem in constant time when there is only one item, the recurrence for the running time is $T(n) = T(n / 2) + cn$ with $T(1) = d$, which gives a running time of $O(n)$.
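The recursion can be sketched in Python as below. Note the swapped-in technique: instead of the worst-case linear-time median selection from Chapter 9, this sketch partitions around a randomly chosen pivot, which gives expected rather than worst-case $O(n)$ time. Items are assumed to be hypothetical `(worth, weight)` pairs with positive weight, and the function name is illustrative.

```python
import random


def fractional_knapsack(items, capacity):
    """Return the maximum total worth for (worth, weight) items, weight > 0."""
    # Precompute each item's value (worth per unit weight) once.
    pool = [(worth / weight, worth, weight) for worth, weight in items]
    total = 0.0
    while pool and capacity > 0:
        pivot = random.choice(pool)[0]  # assumption: random pivot, not true median
        high = [it for it in pool if it[0] > pivot]
        equal = [it for it in pool if it[0] == pivot]
        low = [it for it in pool if it[0] < pivot]
        high_weight = sum(it[2] for it in high)
        if high_weight >= capacity:
            # The answer uses only the higher-value items; recurse on them.
            pool = high
            continue
        # Take every higher-value item whole.
        total += sum(it[1] for it in high)
        capacity -= high_weight
        equal_weight = sum(it[2] for it in equal)
        if equal_weight >= capacity:
            # Fill the remaining capacity with pivot-value items, fractionally.
            return total + pivot * capacity
        total += sum(it[1] for it in equal)
        capacity -= equal_weight
        pool = low  # continue with the lower-value items and reduced capacity
    return total
```

Each pass does work linear in the size of the current pool and discards at least the pivot item, mirroring the $T(n) = T(n / 2) + cn$ recurrence in expectation.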

- The transpose of a directed graph $G = (V, E)$ is the graph $G^\text T = (V, E^\text T)$, where $E^\text T = {\{ (v, u) \in V \times V: (u, v) \in E }\}$. Thus, $G^\text T$ is $G$ with all its edges reversed. Describe efficient algorithms for computing $G^\text T$ from $G$, for both the adjacency-list and adjacency-matrix representations of $G$. Analyze the running times of your algorithms.

Solution:

Adjacency-list representation

Assume the original adjacency list is $Adj$.

    let Adj'[1..|V|] be a new adjacency list of the transposed G^T
    for each vertex u ∈ G.V
        for each vertex v ∈ Adj[u]
            INSERT(Adj'[v], u)

Time complexity: $O(|V| + |E|)$.

Adjacency-matrix representation

Transpose the original matrix in place: for every entry $(i, j)$ above the diagonal, swap it with the entry $(j, i)$ below the diagonal.

Time complexity: $O(|V|^2)$.
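Both procedures can be sketched in Python. This is a minimal illustration that assumes the adjacency list is a dict in which every vertex appears as a key, and that the matrix is a square list of lists; the function names are hypothetical.

```python
def transpose_adj_list(adj):
    """Return the adjacency list of G^T in O(|V| + |E|) time."""
    adj_t = {u: [] for u in adj}  # one empty list per vertex
    for u, neighbors in adj.items():
        for v in neighbors:
            adj_t[v].append(u)    # edge (u, v) becomes (v, u)
    return adj_t


def transpose_matrix_in_place(matrix):
    """Swap entries across the diagonal in O(|V|^2) time."""
    n = len(matrix)
    for i in range(n):
        for j in range(i + 1, n):  # entries strictly above the diagonal
            matrix[i][j], matrix[j][i] = matrix[j][i], matrix[i][j]
    return matrix
```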

- What is the running time of $\text{BFS}$ if we represent its input graph by an adjacency matrix and modify the algorithm to handle this form of input?

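Solution: With an adjacency matrix, finding the neighbors of a vertex requires scanning its entire row, so the time spent iterating over all edges becomes $O(V^2)$ instead of $O(E)$. Therefore, the running time is $O(V + V^2) = O(V^2)$.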
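A small Python sketch of the modified algorithm makes the row scan explicit. It assumes vertices are numbered `0..n-1` and that `matrix[u][v]` is truthy exactly when the edge $(u, v)$ exists; the name `bfs_matrix` is illustrative.

```python
from collections import deque


def bfs_matrix(matrix, source):
    """Return BFS distances from source; None marks unreachable vertices."""
    n = len(matrix)
    dist = [None] * n
    dist[source] = 0
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in range(n):  # scanning the whole row costs Theta(V) per vertex
            if matrix[u][v] and dist[v] is None:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist
```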

- The diameter of a tree $T = (V, E)$ is defined as $\max_{u,v \in V} {\{ \delta(u, v) }\}$, that is, the largest of all shortest-path distances in the tree. Give an efficient algorithm to compute the diameter of a tree, and analyze the running time of your algorithm.

Solution:

Suppose that $a$ and $b$ are the endpoints of the path in the tree which achieves the diameter, and without loss of generality assume that $a$ and $b$ are the unique pair which do so. Let $s$ be any vertex in $T$. We claim that a single $\text{BFS}$ from $s$ will return either $a$ or $b$ (or both) as the vertex whose distance from $s$ is greatest.

To see this, suppose to the contrary that some other vertex $x$ is shown to be furthest from $s$. (Note that $x$ cannot be on the path from $a$ to $b$, since otherwise we could extend the path.) Then we have \(d(s, a) < d(s, x)\) and \(d(s, b) < d(s, x).\) Let $c$ denote the vertex on the path from $a$ to $b$ which minimizes $d(s, c)$. Since the graph is a tree, we must have \(d(s, a) = d(s, c) + d(c, a)\) and \(d(s, b) = d(s, c) + d(c, b).\) (If there were another path, we could form a cycle.) Combining these equalities with $d(s, a) < d(s, x)$, we must have \(\begin{aligned} d(a, b) + 2d(s, c) & = d(s, c) + d(c, b) + d(s, c) + d(c, a) \\ & < d(s, x) + d(s, c) + d(c, b). \end{aligned}\) Since $d(s, c) + d(c, b) = d(s, b)$, the right-hand side equals $d(s, x) + d(s, b)$. We claim that $d(x, b) = d(s, x) + d(s, b)$. If not, then by the triangle inequality we must have a strict less-than. In other words, there is some path from $x$ to $b$ which does not go through $c$. This gives a contradiction, because it implies that there is a cycle formed by concatenating these paths. Then we have \(d(a, b) \le d(a, b) + 2d(s, c) < d(x, b).\)

Since it is assumed that $d(a, b)$ is maximal among all pairs, we have a contradiction. Therefore, since trees have $|V| - 1$ edges, we can run $\text{BFS}$ a single time in $O(V)$ to obtain one of the vertices which is an endpoint of the longest simple path contained in the graph. Running $\text{BFS}$ again from that endpoint will find the other one, so we can solve the diameter problem for trees in $O(V)$.
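The two-pass procedure can be sketched in Python as follows. This sketch assumes the tree is given as a dict mapping each vertex to a list of its neighbors (undirected, so each edge appears in both lists); the helper names are hypothetical.

```python
from collections import deque


def farthest(adj, s):
    """Return (vertex, distance) for a vertex farthest from s, via BFS."""
    dist = {s: 0}
    queue = deque([s])
    last = s
    while queue:
        u = queue.popleft()
        last = u  # BFS dequeues vertices in nondecreasing distance order
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return last, dist[last]


def tree_diameter(adj):
    """Return the diameter of a nonempty tree using two BFS passes."""
    start = next(iter(adj))             # any vertex s
    endpoint, _ = farthest(adj, start)  # first pass finds one endpoint
    _, diameter = farthest(adj, endpoint)  # second pass finds the other
    return diameter
```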

- Given a graph $G$ and a minimum spanning tree $T$, suppose that we decrease the weight of one of the edges not in $T$. Give an algorithm for finding the minimum spanning tree in the modified graph.

Solution: If we add the newly decreased edge to the given tree, we create a cycle. Removing any one of the edges along this cycle leaves a spanning tree. So we look at all the weights along the cycle formed by adding the decreased edge, and remove the edge of maximum weight on the cycle. This does exactly what we want: the only change that could possibly help is to add the single decreased edge, and from there we turn the graph back into a tree in the way that minimizes its total weight.
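One way to carry this out is sketched below in Python. The sketch assumes vertices are numbered `0..n-1`, the tree is a hypothetical list of `(a, b, weight)` triples, and the decreased edge `(u, v)` has `u != v`; the cycle is found by walking the unique tree path between `u` and `v`, which takes $O(V)$ time.

```python
def update_mst(n, tree_edges, u, v, new_weight):
    """Return MST edges after the non-tree edge (u, v) drops to new_weight."""
    adj = {i: [] for i in range(n)}
    for a, b, w in tree_edges:
        adj[a].append((b, w))
        adj[b].append((a, w))
    # Depth-first search from u, recording each vertex's parent edge.
    parent = {u: None}
    stack = [u]
    while stack:
        x = stack.pop()
        for y, w in adj[x]:
            if y not in parent:
                parent[y] = (x, w)
                stack.append(y)
    # Walk the tree path from v back to u, tracking the heaviest edge.
    heaviest = None
    x = v
    while parent[x] is not None:
        p, w = parent[x]
        if heaviest is None or w > heaviest[2]:
            heaviest = (p, x, w)
        x = p
    if heaviest is None or heaviest[2] <= new_weight:
        return list(tree_edges)  # the old tree is still minimum
    a, b, _ = heaviest
    result = [e for e in tree_edges if {e[0], e[1]} != {a, b}]
    result.append((u, v, new_weight))
    return result
```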

- Let $G = (V, E)$ be a weighted, directed graph with weight function $w : E \rightarrow \mathbb R$. Give an $O(VE)$-time algorithm to find, for each vertex $v \in V$, the value $\delta^*(v) = \min_{u \in V} {\{\delta(u, v)}\}$.

- Hint: Modify the Bellman-Ford algorithm.

Solution:

    RELAX(u, v, w)
        if v.d > min(w(u, v), w(u, v) + u.d)
            v.d = min(w(u, v), w(u, v) + u.d)
            v.π = u.π
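A Python sketch of Bellman-Ford with this modified relaxation is given below. Several things here are assumptions rather than part of the solution above: edges are hypothetical `(u, v, weight)` triples, the graph has no negative-weight cycles, predecessor tracking is omitted, and the final `min(0, ...)` is added because $u = v$ is allowed in the definition and $\delta(v, v) = 0$.

```python
import math


def min_incoming_distance(vertices, edges):
    """Return {v: delta*(v)} in O(VE) time, assuming no negative cycles."""
    d = {v: math.inf for v in vertices}
    for _ in range(len(vertices) - 1):
        for u, v, w in edges:
            # Modified RELAX: a path may start at u or extend one ending at u.
            candidate = min(w, w + d[u])
            if d[v] > candidate:
                d[v] = candidate
    # Assumption: the empty path from v to itself contributes delta(v, v) = 0.
    return {v: min(0, d[v]) for v in vertices}
```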

- Let $Ax \le b$ be a system of $m$ difference constraints in $n$ unknowns. Show that the Bellman-Ford algorithm, when run on the corresponding constraint graph, maximizes $\sum_{i = 1}^n x_i$ subject to $Ax \le b$ and $x_i \le 0$ for all $x_i$.

Solution:

Bellman-Ford correctly solves the system of difference constraints, so $Ax \le b$ is always satisfied. We also have $x_i = \delta(v_0, v_i) \le w(v_0, v_i) = 0$, so $x_i \le 0$ for all $i$. To show that $\sum x_i$ is maximized, we show that any feasible solution $(y_1, y_2, \ldots, y_n)$ which satisfies the constraints has $y_i \le \delta(v_0, v_i) = x_i$. Let $v_0, v_{i_1}, \ldots, v_{i_k}$, with $v_{i_k} = v_i$, be a shortest path from $v_0$ to $v_i$ in the constraint graph. Then we must have the constraints $y_{i_2} - y_{i_1} \le w(v_{i_1}, v_{i_2}), \ldots, y_{i_k} - y_{i_{k - 1}} \le w(v_{i_{k - 1}},v_{i_k})$. Summing these up, and using $y_{i_1} \le 0$ and $w(v_0, v_{i_1}) = 0$, we have \(y_i \le y_i - y_{i_1} \le \sum_{m = 2}^k w(v_{i_{m - 1}}, v_{i_m}) = \delta(v_0, v_i) = x_i.\)
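As a concrete check, the following Python sketch builds the constraint graph and runs Bellman-Ford on it. The encoding is an assumption made for illustration: each constraint $x_j - x_i \le b$ is a hypothetical triple `(j, i, b)` with 1-based indices, and vertex `0` plays the role of $v_0$.

```python
import math


def solve_difference_constraints(n, constraints):
    """Return [x_1, ..., x_n] from Bellman-Ford, or None if infeasible."""
    # Zero-weight edges from the source v_0 to every v_i.
    edges = [(0, i, 0) for i in range(1, n + 1)]
    # The constraint x_j - x_i <= b becomes the edge (v_i, v_j) of weight b.
    edges += [(i, j, b) for j, i, b in constraints]
    d = [0] + [math.inf] * n
    for _ in range(n):  # the graph has n + 1 vertices, so n passes
        for u, v, w in edges:
            if d[u] + w < d[v]:
                d[v] = d[u] + w
    for u, v, w in edges:
        if d[u] + w < d[v]:
            return None  # a negative-weight cycle: no feasible solution
    return d[1:]
```

For instance, the single constraint $x_1 - x_2 \le -3$ yields $x = (-3, 0)$: every feasible $y$ with $y_i \le 0$ has $y_1 \le y_2 - 3 \le -3$, so no feasible solution has a larger sum.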

- Show that the Bellman-Ford algorithm, when run on the constraint graph for a system $Ax \le b$ of difference constraints, minimizes the quantity $(\max{x_i} - \min{x_i})$ subject to $Ax \le b$. Explain how this fact might come in handy if the algorithm is used to schedule construction jobs.

Solution:

We can see that the Bellman-Ford algorithm run on the graph whose construction is described in this section causes the quantity $\max{x_i} - \min{x_i}$ to be minimized. We know that the largest value assigned to any of the vertices in the constraint graph is $0$. It is clear that it cannot be greater than zero, since the single-edge path to each of the vertices has cost zero. We also know that we cannot have every vertex having a shortest path with negative weight. To see this, notice that this would mean that the predecessor pointer of each vertex points to some other vertex that is not the source. This means that if we follow the procedure for reconstructing the shortest path for any of the vertices, we can never get back to the source, a contradiction to the fact that it is a shortest path from the source to that vertex.

Next, we note that when we run Bellman-Ford, we are maximizing $\min{x_i}$. The shortest distance in the constraint graph is the bare minimum of what is required in order to have all the constraints satisfied; if we were to increase any of the values, we would be violating a constraint.

This could come in handy when scheduling construction jobs because the quantity $\max{x_i} - \min{x_i}$ is equal to the difference in time between the last task and the first task. Therefore, minimizing it means that the total time that all the jobs take is also minimized. Most people want the entire process of construction to take as short a time as possible.

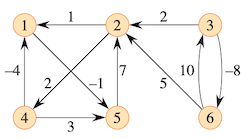

- Run the Floyd-Warshall algorithm on the weighted, directed graph given below. Show the matrix $D^{(k)}$ that results for each iteration of the outer loop.

Solution:

$k = 1$:

$$ \begin{pmatrix} 0 & \infty & \infty & \infty & -1 & \infty \\ 1 & 0 & \infty & 2 & 0 & \infty \\ \infty & 2 & 0 & \infty & \infty & -8 \\ -4 & \infty & \infty & 0 & -5 & \infty \\ \infty & 7 & \infty & \infty & 0 & \infty \\ \infty & 5 & 10 & \infty & \infty & 0 \end{pmatrix} $$

$k = 2$:

$$ \begin{pmatrix} 0 & \infty & \infty & \infty & -1 & \infty \\ 1 & 0 & \infty & 2 & 0 & \infty \\ 3 & 2 & 0 & 4 & 2 & - 8 \\ -4 & \infty & \infty & 0 & -5 & \infty \\ 8 & 7 & \infty & 9 & 0 & \infty \\ 6 & 5 & 10 & 7 & 5 & 0 \end{pmatrix} $$

$k = 3$:

$$ \begin{pmatrix} 0 & \infty & \infty & \infty & -1 & \infty \\ 1 & 0 & \infty & 2 & 0 & \infty \\ 3 & 2 & 0 & 4 & 2 & -8 \\ -4 & \infty & \infty & 0 & -5 & \infty \\ 8 & 7 & \infty & 9 & 0 & \infty \\ 6 & 5 & 10 & 7 & 5 & 0 \end{pmatrix} $$

$k = 4$:

$$ \begin{pmatrix} 0 & \infty & \infty & \infty & -1 & \infty \\ -2 & 0 & \infty & 2 & -3 & \infty \\ 0 & 2 & 0 & 4 & -1 & -8 \\ -4 & \infty & \infty & 0 & -5 & \infty \\ 5 & 7 & \infty & 9 & 0 & \infty \\ 3 & 5 & 10 & 7 & 2 & 0 \end{pmatrix} $$

$k = 5$:

$$ \begin{pmatrix} 0 & 6 & \infty & 8 & -1 & \infty \\ -2 & 0 & \infty & 2 & -3 & \infty \\ 0 & 2 & 0 & 4 & -1 & -8 \\ -4 & 2 & \infty & 0 & -5 & \infty \\ 5 & 7 & \infty & 9 & 0 & \infty \\ 3 & 5 & 10 & 7 & 2 & 0 \end{pmatrix} $$

$k = 6$:

$$ \begin{pmatrix} 0 & 6 & \infty & 8 & -1 & \infty \\ -2 & 0 & \infty & 2 & -3 & \infty \\ -5 & -3 & 0 & -1 & -6 & -8 \\ -4 & 2 & \infty & 0 & -5 & \infty \\ 5 & 7 & \infty & 9 & 0 & \infty \\ 3 & 5 & 10 & 7 & 2 & 0 \end{pmatrix} $$
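The iterations above can be checked mechanically. Because the input graph itself is not reproduced in the text, this Python sketch starts from the $k = 1$ matrix shown above instead of from the edge weights and applies the remaining iterations; the function names are hypothetical.

```python
import math

INF = math.inf


def floyd_warshall_step(D, k):
    """Return the next matrix, allowing vertex k (0-based) as an intermediate."""
    n = len(D)
    return [[min(D[i][j], D[i][k] + D[k][j]) for j in range(n)]
            for i in range(n)]


# D^(1), copied from the k = 1 matrix above.
D1 = [
    [0, INF, INF, INF, -1, INF],
    [1, 0, INF, 2, 0, INF],
    [INF, 2, 0, INF, INF, -8],
    [-4, INF, INF, 0, -5, INF],
    [INF, 7, INF, INF, 0, INF],
    [INF, 5, 10, INF, INF, 0],
]


def remaining_matrices(D):
    """Yield D^(2), ..., D^(n); 0-based index k stands for vertex k + 1."""
    for k in range(1, len(D)):
        D = floyd_warshall_step(D, k)
        yield D


matrices = list(remaining_matrices(D1))
```

Here `matrices[0]` corresponds to $k = 2$ and `matrices[-1]` to $k = 6$.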